I’ve known about the API, but I’ve never had the opportunity to develop anything on top of it professionally. With a new project requiring an overhaul of the existing framework, I thought I’d start with the API, because I’m building out the SDR nearly from scratch.

Essentially, I’m starting with an audit of the existing Report Suite configuration: eVars, props, custom events, products, list variables, and marketing processing logic. From there I’ll audit the client-side implementation in Adobe Launch, and finally the server-side event forwarding.

My goal for the project is to reduce the overall logic required in the tag manager to track Adobe Analytics and Customer Journey Analytics data. In other words, I want to reduce the number of rules and data elements required to capture new events and data.

When implementing an analytics framework, with or without a tag management system, much of it can be automated with a well-constructed data model and event system. My preference is to develop a minimal amount of logic within the TMS so that it requires less support over time.

In my current role, I inherited a platform that was about halfway there with its event-driven data layer and TMS configuration. Given the volume of rules and data elements needed to support eight domains, with more coming, auditing manually would be a pain, so it’s well worth the time to develop a solution using the API.

If you’re not familiar with it, you can find more information on Adobe’s site: https://experienceleague.adobe.com/docs/experience-platform/tags/api/overview.html?lang=en

What I’m trying to answer in this first pass:

- Audit eVars/props/success events
  - Which are defined in the Report Suite config?
  - Which are configured or not in Adobe Launch?
  - Which are populated or not via Adobe Launch?
- Audit Adobe Launch Rules and Data Elements
  - Duplicate data elements
  - Misconfigured data elements
  - Do all rules have all applicable Data Elements mapped?
- Consent Configuration
  - Audit all third-party tags which do or do not have consent settings applied

I’ll end up repeating the process for the Event Forwarding container, but for now starting with the client-side container. This will help me determine how to configure the minimal number of rules required for AA and CJA tracking.
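As one example of the checks listed above, duplicate data elements could be flagged with a few lines of pandas. This is only a minimal sketch: it assumes the data elements have already been pulled into a DataFrame using the flattened column names that appear later in this post (attributes_name, attributes_delegate_descriptor_id, attributes_settings), and the function name find_duplicate_data_elements is made up for illustration.

```python
import pandas as pd


def find_duplicate_data_elements(df):
    """Return data elements that share the same type and the same settings string.

    Assumption: two data elements are "duplicates" when both their delegate
    descriptor and their raw settings string match exactly.
    """
    keys = ["attributes_delegate_descriptor_id", "attributes_settings"]
    # keep=False marks every member of a duplicate group, not just the repeats
    dupes = df[df.duplicated(subset=keys, keep=False)]
    return dupes.sort_values(keys + ["attributes_name"])
```

Because this compares the raw settings strings, two data elements whose settings differ only in key order or whitespace would not be caught; parsing the settings first would be a stricter variant.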

Where to start

The first thing you should do is familiarize yourself with the response data. As usual, the Adobe documentation is thin, so you’ll need to dig in yourself. I’m working in Python, so I started by requesting the access token, then moved on to requesting all the Rules, Rule Components, Data Elements, and associated Notes.
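For readers who haven’t made the token request before, here is a rough sketch of that first step. It assumes an OAuth server-to-server credential from the Adobe Developer Console; the token URL, the scope string you pass in, and the header set are assumptions to confirm against your own project and Adobe’s documentation, and the function names are hypothetical.

```python
import requests

# Assumption: IMS token endpoint for OAuth server-to-server credentials
IMS_TOKEN_URL = "https://ims-na1.adobelogin.com/ims/token/v3"


def fetch_access_token(client_id, client_secret, scopes):
    """Exchange client credentials for a bearer token (makes a network call)."""
    response = requests.post(
        IMS_TOKEN_URL,
        data={
            "grant_type": "client_credentials",
            "client_id": client_id,
            "client_secret": client_secret,
            "scope": scopes,  # copy the scope list from your Developer Console project
        },
        timeout=30,
    )
    response.raise_for_status()
    return response.json()["access_token"]


def build_headers(access_token, client_id, org_id):
    """Build the headers sent with each request to reactor.adobe.io."""
    return {
        "Authorization": f"Bearer {access_token}",
        "x-api-key": client_id,
        "x-gw-ims-org-id": org_id,
        "Accept": "application/vnd.api+json;revision=1",
        "Content-Type": "application/vnd.api+json",
    }
```

The resulting dictionary plays the role of a headers variable such as rcHeaders in the requests that follow.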

One by one, I familiarized myself with the structure and composition of each response; Adobe has kept it all very standardized. Some fields are entirely null, and important fields like the Published Date are missing, but overall the data is good quality.

Below you can see a basic flow of what my process looks like. I start with the access token, then retrieve all the rules via the Rules endpoint, and then the rule components using the unique IDs from the rules response. Lastly, I retrieve all related notes, using the links to them contained in the rules and rule components responses. Once everything has been retrieved and flattened, I perform some cleanup and transformation, along with some calculations for testing.

I repeat the process for the data elements, with some tweaks in the processing for their one-to-many relationship with rules and rule components.
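To illustrate that one-to-many relationship, here is a hedged sketch that builds a lookup of which rule components reference which data elements. It relies on the %Data Element Name% token syntax that Launch uses inside settings, and it assumes each rule component is a dict carrying id, rule_id, and attributes_settings (the raw settings string). The function name is hypothetical.

```python
import re

# Launch references a data element inside settings as %Data Element Name%
DE_TOKEN = re.compile(r"%([^%]+)%")


def data_element_references(components):
    """Return sorted, de-duplicated (data_element_name, rule_id, component_id) rows."""
    rows = set()
    for comp in components:
        settings = comp.get("attributes_settings") or ""  # tolerate missing settings
        for name in DE_TOKEN.findall(settings):
            rows.add((name.strip(), comp["rule_id"], comp["id"]))
    return sorted(rows)
```

This will miss data elements read in custom code (for example via _satellite.getVar), and it can produce false matches on URL-encoded strings such as %20, so treat the output as a starting point rather than a complete map.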

Every implementation will have its own details to focus on, but one thing we all share is the limit of 100 results per page. If your rules and data elements exceed that, the first thing to do is add logic that checks the total number of pages for your request size.

Here’s a sample snippet that iterates through the page count and uses json_normalize to flatten the data, along with some cosmetic cleanup and the addition of the parent rule_id value. ‘rcData’ is the rule components response in a dataframe. The ‘meta’ object is on every successful response and contains the ‘pagination’ value, so we know how much data to expect for the given request.

```python
import pandas as pd
import requests

# rule_id, rcHeaders, rcData (the first rule components response) and
# rcDf (the DataFrame that accumulates results) are defined earlier.
pageCount = rcData['meta'].get('pagination')['total_pages']

for page in range(1, pageCount + 1):
    rcUrl2 = ("https://reactor.adobe.io/rules/" + rule_id
              + "/rule_components?page[size]=100&page[number]=" + str(page))
    rcResponse2 = requests.get(rcUrl2, headers=rcHeaders)
    rcData2 = rcResponse2.json()

    # Flatten the 'data' array into one row per rule component
    rcdf2 = pd.json_normalize(data=rcData2, record_path='data')
    # regex=False so '.' is replaced literally rather than treated as a wildcard
    rcdf2.columns = rcdf2.columns.str.replace('.', '_', regex=False)
    rcdf2['rule_id'] = rule_id
    rcDf = pd.concat([rcDf, rcdf2])
```

As you start bringing in more data, if you’re using the Adobe XDM Data Element Type, you’ll notice that the ‘attributes.settings’ object contains all the specific mappings of data elements or values to eVars, props, and the rest of the Adobe Analytics related payloads (among all the other settings for rule components and data elements).

This is where the bulk of my auditing and review will take place for my current project, so I needed a way to flatten the responses the same way every time. This turns out to be a breeze with json_normalize.

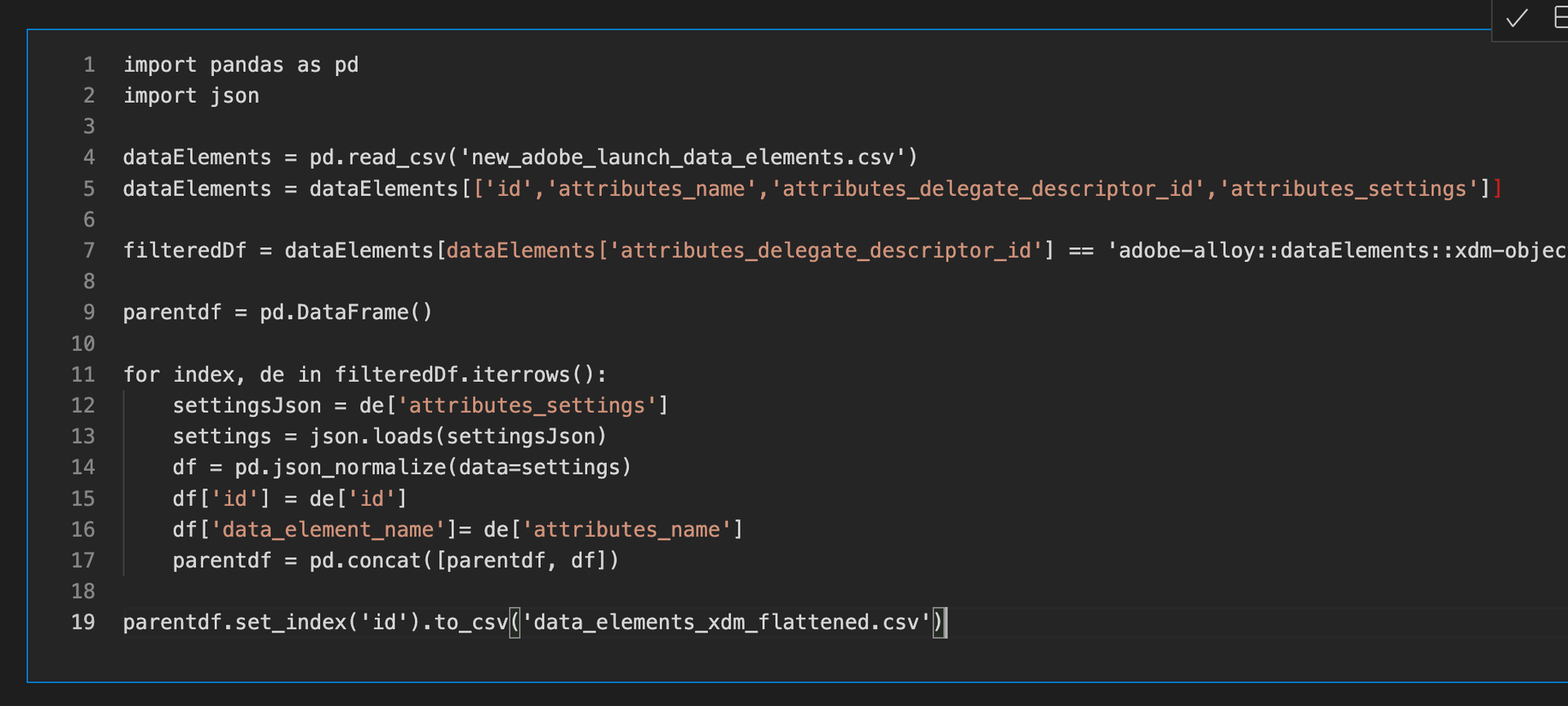

```python
import json

import pandas as pd

dataElements = pd.read_csv('new_adobe_launch_data_elements.csv')
dataElements = dataElements[['id', 'attributes_name', 'attributes_delegate_descriptor_id', 'attributes_settings']]

# Keep only the XDM object data elements, whose settings are known to be JSON strings
filteredDf = dataElements[dataElements['attributes_delegate_descriptor_id'] == 'adobe-alloy::dataElements::xdm-object']

parentdf = pd.DataFrame()

for index, de in filteredDf.iterrows():
    # Parse the settings string, then flatten nested keys into dotted column names
    settingsJson = de['attributes_settings']
    settings = json.loads(settingsJson)
    df = pd.json_normalize(data=settings)

    # Carry the data element's id and name onto its flattened row
    df['id'] = de['id']
    df['data_element_name'] = de['attributes_name']
    parentdf = pd.concat([parentdf, df])

parentdf.set_index('id').to_csv('data_elements_xdm_flattened.csv')
```

Now we’ve got a flattened table to work from, which makes audits and comparisons easy using SQL, Excel, Sheets, or any visualization tool. Before I publish, I will make some adjustments, like cleaning up the column names and filling in missing eVar and prop columns associated with unused or unmapped variables.
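The cleanup mentioned above might look something like the following. It is only a sketch under assumptions: the column prefix in the usage note is a made-up placeholder (the real flattened XDM paths will depend on your schema), the eVar count is arbitrary, and both function names are hypothetical.

```python
import pandas as pd


def strip_prefix(df, prefix):
    """Drop a shared leading path from column names and replace dots with underscores."""
    out = df.copy()
    out.columns = [
        (col[len(prefix):] if col.startswith(prefix) else col).replace(".", "_")
        for col in out.columns
    ]
    return out


def add_unmapped_variables(df, kind, count):
    """Add an empty column for every eVarN/propN that no data element maps."""
    out = df.copy()
    for n in range(1, count + 1):
        name = f"{kind}{n}"
        if name not in out.columns:
            out[name] = pd.NA  # empty column marks the variable as unmapped
    return out
```

For example, add_unmapped_variables(strip_prefix(parentdf, "data.analytics.eVars."), "eVar", 250) would shorten the eVar column names and append empty columns for the unmapped ones, with the prefix and the count of 250 swapped for whatever matches your setup.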

Over time, we’ll be able to repeat the process in a similar fashion for the rest of the API endpoints, adding support for other components, libraries, environments, and builds, and then moving on to capturing Event Forwarding data after that.

This was a rushed introduction, but once the project gets to the next phase, I’ll be posting more information and links to the GitHub project.

Note

You may notice the “json.loads(settingsJson)” call above. Once I filtered down to a single delegate_descriptor_id, I knew that all the values would be JSON strings. Applying the json.loads() method to all data elements results in an error because of invalid JSON. I haven’t located which entries cause the error; instead, I’m focusing on one delegate_descriptor_id at a time and the expected format of the ‘settings’ object.
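One way to track down the offending entries would be to parse defensively and set aside whatever fails. The sketch below is not what I ran for this post; it assumes the same attributes_settings column as above and treats both non-string values (such as NaN from an empty CSV cell) and malformed strings as unparseable. The function names are hypothetical.

```python
import json

import pandas as pd


def parse_settings(value):
    """Return the parsed settings, or None when the value is not valid JSON."""
    if not isinstance(value, str):
        return None  # e.g. NaN from an empty CSV cell
    try:
        return json.loads(value)
    except json.JSONDecodeError:
        return None


def split_parseable(df, column="attributes_settings"):
    """Split df into rows whose settings parse and rows whose settings do not."""
    parsed = df[column].apply(parse_settings)
    ok = parsed.notna()
    good = df[ok].assign(settings_parsed=parsed[ok])
    bad = df[~ok]
    return good, bad
```

Grouping the bad rows by attributes_delegate_descriptor_id should then show which data element types store settings that are not valid JSON.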